McCarthy’s definition characterized AI as ‘the science and engineering of making intelligent machines’[1]. As per Webster’s Dictionary (1913), the term explainable means ‘capable of being explained or made plain to the understanding; capable of being interpreted’. Explainable Artificial Intelligence (XAI or explainable AI) can be defined as ‘artificial intelligence that can document how specific outcomes were generated in such a way that ordinary humans can understand the process’[2].

The principle of explainability, enshrined in a multitude of ethical guidelines at both the national and global levels, seeks to address the fundamental issue of comprehending the decision-making processes of AI algorithms. Further, explainable AI provides a pathway to uncover potential biases, granting developers and users the ability to rectify them. Explainability can be perceived as an element of transparency, encompassing an understanding of ‘that’ something happens (for instance, that AI is being used) and of ‘how’ the cognitive-decisional-executive process involving AI unfolds[3]. For instance, explainable AI should be able to elucidate ‘why a specific solution was derived’, ‘how a specific solution was arrived at’, and ‘when a particular AI-based system may encounter failure’. Explainable AI techniques thus offer a better understanding of an AI model’s decisions, and knowing how to apply them will matter more as these techniques become more widely adopted.

AI and machine learning models fall into two distinct categories based on their interpretability to humans: white-box models and black-box models. White-box models possess transparent structures that allow human observers to comprehend the underlying logic guiding the model’s decisions. Examples of such models include decision trees, logistic regression, and various rule-based systems. In contrast, black-box models exhibit intricate structures that yield less intelligible connections between input and output. Examples of such models include neural networks, random forests, and other ensemble models. The growing interest in model interpretability is closely linked to the rise of black-box models, whose opacity concerns practitioners. However, white-box models can also present challenges, as their transparent structure can become complex. Linear models with a multitude of variables, expansive trees with numerous nodes, and rule-based systems with extensive conditions can all pose their own interpretability challenges for humans[4].
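
To make the idea of a white-box model concrete, the following is a minimal sketch of a rule-based system whose every decision can be traced to the rule that produced it. The loan scenario, the field names, and the thresholds are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Rule:
    """One human-readable rule: a description, a test, and the outcome it yields."""
    description: str
    condition: Callable[[dict], bool]
    outcome: str


# Hypothetical rules for an illustrative loan decision; the thresholds are invented.
RULES: List[Rule] = [
    Rule("income below 20,000", lambda a: a["income"] < 20_000, "reject"),
    Rule("debt ratio above 0.5", lambda a: a["debt_ratio"] > 0.5, "reject"),
    Rule("at least 2 years employed", lambda a: a["years_employed"] >= 2, "approve"),
]
DEFAULT_OUTCOME = "refer to human reviewer"


def decide(applicant: dict) -> Tuple[str, str]:
    """Return the decision together with the description of the rule that fired."""
    for rule in RULES:  # rules are checked in order; the first match wins
        if rule.condition(applicant):
            return rule.outcome, rule.description
    return DEFAULT_OUTCOME, "no rule matched"


decision, reason = decide({"income": 45_000, "debt_ratio": 0.62, "years_employed": 5})
print(f"{decision}: {reason}")  # prints "reject: debt ratio above 0.5"
```

With three rules the logic is easy to follow. A system built the same way with hundreds of interacting rules would remain formally transparent yet become hard for a person to reason about, which is the caveat raised above.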

Further, explainable AI techniques can be broadly categorized into two distinct groups: ante-hoc methods and post-hoc methods. Ante-hoc techniques rely on the direct adoption of machine learning models that are inherently interpretable, such as decision trees and explainable neural networks. They come with a limitation, however: they are confined to a predefined list of models that are considered inherently interpretable. Most of these models also tend to exhibit lower predictive performance than more complex machine learning models, such as deep neural networks[5]. Post-hoc techniques, on the other hand, can be applied to virtually any machine learning algorithm, since they generate explanations after a model has been trained. Hence, post-hoc techniques have gained prominence due to their model-agnostic nature[6],[7].
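
The model-agnostic character of post-hoc methods can be sketched in a few lines. The example below uses permutation importance, one common post-hoc technique (it is not the specific method of the works cited above): it treats the model as an opaque function, shuffles one input feature at a time, and measures how much accuracy drops. The `black_box` function and the synthetic data are assumptions made for illustration only.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def black_box(row: Row) -> int:
    """Stand-in for an opaque trained model; the explainer never inspects this body."""
    return 1 if 0.8 * row[0] - 0.5 * row[1] > 0.1 else 0


def accuracy(model: Model, rows: List[Row], labels: List[int]) -> float:
    """Fraction of rows for which the model's prediction matches the label."""
    hits = sum(1 for r, y in zip(rows, labels) if model(r) == y)
    return hits / len(rows)


def permutation_importance(model: Model, rows: List[Row], labels: List[int],
                           n_features: int, seed: int = 0) -> List[float]:
    """Accuracy drop when each feature column is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [list(r[:j]) + [v] + list(r[j + 1:]) for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return drops


# Synthetic data: three features, of which the model only uses the first two.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels, n_features=3)
for j, drop in enumerate(importances):
    print(f"feature {j}: accuracy drop {drop:.3f}")
```

Because the explainer only calls the model and never looks inside it, the same function would work unchanged for a neural network or an ensemble. The third feature, which the stand-in model ignores, receives an importance of exactly zero.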

In many applications, an explanation of how an answer was obtained is crucial for ensuring trust and transparency. As AI’s influence on society steadily expands and regulatory frameworks grow more stringent, the need for transparency and accountability has increased. This is particularly significant in light of harmful algorithms that empirical studies have shown to be biased against specific racial demographics. If these flawed algorithms were replaced by others with an equally harmful impact, their influence would likely endure even longer, or be exacerbated by subsequent iterations with comparable biases[8].

Several questions arise about what trust means here. Does it refer to faith in a model’s performance[9], its robustness, or some other property of the decisions it makes? Does interpretability simply mean a low-level mechanistic understanding of our models? If so, does it apply to the features, parameters, models, or training algorithms? The legal notion of a right to explanation offers yet another lens on interpretability.[10]

The following paragraphs discuss, at a high level, the legal considerations for explainable AI under different bodies of law:

i) Data privacy laws:

Data privacy is a major legal concern when it comes to explainable AI. For explainable AI to deliver insightful explanations of its decision-making processes, the system must remain informed of the data it uses in shaping these decisions. This data includes user information, which must be safeguarded to ensure that explainable AI complies with stringent data privacy regulations.

In the paragraphs below, relevant parts of the General Data Protection Regulation (GDPR) are analyzed:

Article 5(1)(a) GDPR states that personal data shall be ‘processed lawfully, fairly and in a transparent manner in relation to the data subject (‘lawfulness, fairness and transparency’)’.

Article 22(1) of the GDPR states that ‘the data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her’.

According to Article 22(3) GDPR, ‘the data controller shall implement suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests, at least the right to obtain human intervention on the part of the controller, to express his or her point of view and to contest the decision’.

A number of academic scholars have construed the GDPR as mandating that data controllers who arrive at decisions regarding individuals based ‘solely on automated processing’ furnish these individuals with meaningful information on the logic involved in automated decision-making[11]. However, the extent to which this information or explanation needs to be accurate or comprehensive remains unclear.

On a plain reading of Article 13(2)(f) of the GDPR and the corresponding Article 14(2)(g), the data controller is obliged to inform the individual of the existence of automated decision-making, including profiling, as referred to in Article 22(1) and (4) of the GDPR. Where such automated processes come into play, the controller must also give the data subject meaningful information about the logic involved, as well as the significance and the foreseeable consequences of such processing for the individual[12].

Other considerations:

In the context of algorithmic governance, two pivotal rights come to the forefront: the ‘right to explanation’ and the ‘right to be forgotten’. The former empowers individuals to demand a transparent and actionable account of algorithmic decisions. The latter gives them the authority to request the erasure of their data from an organization’s data repositories and models. The two can conflict: enforcing the right to be forgotten could require model updates, which may invalidate previously provided explanations and thereby violate the right to explanation[13].
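
The tension between the two rights can be illustrated with a deliberately simple sketch. The toy model, the income figures, and the counterfactual-style wording of the explanation are all hypothetical; real systems and real explanation methods are far more involved.

```python
from statistics import mean
from typing import List, Tuple

Record = Tuple[float, int]  # (income in thousands, 1 = approved / 0 = rejected)


def train_threshold(records: List[Record]) -> float:
    """Toy model: approve when income reaches the midpoint between the class means."""
    approved = [x for x, y in records if y == 1]
    rejected = [x for x, y in records if y == 0]
    return (mean(approved) + mean(rejected)) / 2


def explain(income: float, threshold: float) -> str:
    """Counterfactual-style explanation: what would have changed the outcome."""
    if income >= threshold:
        return "approved"
    return f"rejected; would be approved at income >= {threshold:.1f}"


records: List[Record] = [(20, 0), (30, 0), (40, 0), (50, 1), (60, 1), (70, 1)]

# Step 1: an applicant earning 42 is rejected and told what would change the outcome.
old_threshold = train_threshold(records)
print(explain(42, old_threshold))

# Step 2: another individual invokes erasure; their record is removed and the model retrained.
records_after_erasure = [r for r in records if r != (50, 1)]
new_threshold = train_threshold(records_after_erasure)

# Step 3: the applicant acts on the earlier explanation, but the model has since changed.
print(explain(45, new_threshold))
```

In this sketch the first explanation names a threshold of 45.0, while the retrained model requires 47.5, so an applicant who followed the original explanation is still rejected.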

In the eyes of legal scholars and data protection enforcement authorities (CNIL 2017 and ICO 2017), AI presents substantial hurdles to privacy and data protection, in addition to its impact on various other areas[14].

These considerations encompass a spectrum of issues, including the necessity of obtaining informed consent, concerns about surveillance[15], and potential violations of individuals’ data protection rights. These rights encompass the right to access personal data, the right to prevent processing that may result in harm or distress, and the right to avoid decisions solely driven by automated processes, among others. Wachter & Mittelstadt[16] have raised significant apprehensions regarding algorithmic accountability. They emphasize the limited control and oversight individuals have over the utilization of their personal data for drawing inferences about them. To address this accountability gap, they advocate for the establishment of a new data protection right – the ‘right to reasonable inferences’. This right aims to curtail the risks associated with ‘high-risk inferences’, which are characterized by their invasiveness, potential harm to one’s reputation, and a lack of verifiability, often relying on predictive or opinion-based assessments.[17]

ii) Human rights laws:

In an increasingly digital world, the intersection of human rights and AI has become a pressing concern. As technology continues to advance at an unprecedented pace, it’s crucial to examine how AI systems impact our fundamental rights and freedoms. Achieving a balance between the potential benefits of AI and the protection of human rights is an ongoing endeavor that requires vigilance, collaboration, and ethical considerations. In the paragraphs below, explainable AI is analyzed from the perspective of the European Convention on Human Rights (ECHR) and the EU Charter:

Article 1 of Protocol No.1 of the ECHR states that[18]:

‘Every natural or legal person is entitled to the peaceful enjoyment of his possessions. No one shall be deprived of his possessions except in the public interest and subject to the conditions provided for by law and by the general principles of international law.

The preceding provisions shall not, however, in any way impair the right of the State to enforce such laws as it deems necessary to control the use of property in accordance with the general interest or to secure payment of taxes or other contributions or penalties.’

Article 6 of the ECHR and the Ethics Guidelines set a benchmark for proper procedure. Among other requirements, these standards mandate a transparent and fair procedure that culminates in a well-reasoned judgment. Simplifying the judicial process therefore calls for comprehensive and transparent reasoning, so that all parties to legal proceedings are on a level playing field.[19]

Under Article 6, the concept of fairness has a specific meaning. It does not imply that the verdict must align with everyone’s notion of fairness, since that can vary greatly among the parties involved. Fairness, in this context, carries a more formal and precise connotation. It signifies that the case must be heard by an impartial tribunal at the national level, with all parties having equal rights. Those accused must be fully aware of the charges against them, granted access to legal assistance, and provided with an interpreter if they are not proficient in the language of the proceedings. Failure to uphold any of these entitlements could lead the European Court of Human Rights to find a violation of Article 6. Consequently, the primary focus is on procedural fairness rather than distributive fairness, emphasizing the importance of due process.

Article 13 ECHR (Right to an effective remedy) states: ‘Everyone whose rights and freedoms as set forth in this Convention are violated shall have an effective remedy before a national authority notwithstanding that the violation has been committed by persons acting in an official capacity’. The question that arises is what would constitute an effective remedy in matters involving AI.

Article 14 ECHR (Prohibition of discrimination) states: ‘The enjoyment of the rights and freedoms set forth in [the] Convention shall be secured without discrimination on any ground such as sex, race, colour, language, religion, political or other opinion, national or social origin, association with a national minority, property, birth or other status’. The question that arises is how this prohibition applies to bias in machine learning and AI models.

Article 41 of the EU Charter (Right to good administration) states:

1. Every person has the right to have his or her affairs handled impartially, fairly and within a reasonable time by the institutions, bodies, offices, and agencies of the Union.

2. This right includes:

(a) the right of every person to be heard, before any individual measure which would affect him or her adversely is taken;

(b) the right of every person to have access to his or her file, while respecting the legitimate interests of confidentiality and of professional and business secrecy;

(c) the obligation of the administration to give reasons for its decisions.

3. Every person has the right to have the Union make good any damage caused by its institutions or by its servants in the performance of their duties, in accordance with the general principles common to the laws of the Member States.

4. Every person may write to the institutions of the Union in one of the languages of the Treaties and must have an answer in the same language.

The question arises as to what extent AI/ explainable AI models can be fair and what is fairness in the context of explainable AI.

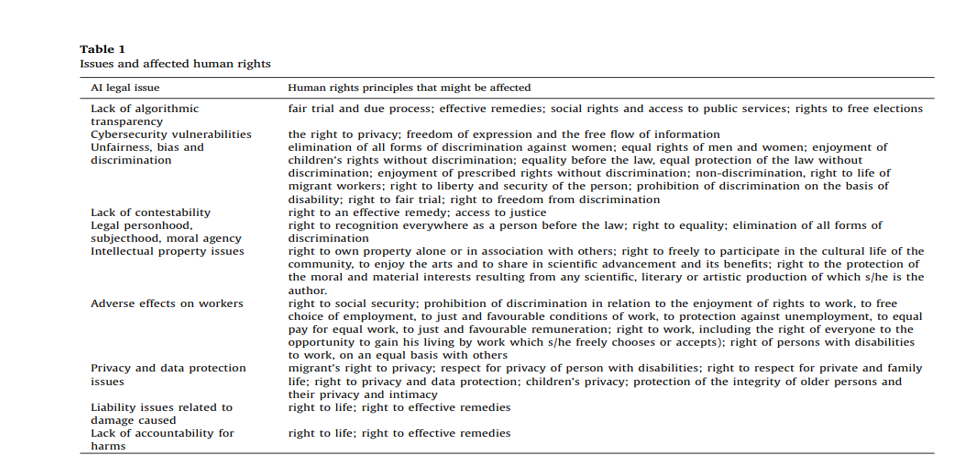

Of the ten issues raised by Rodrigues[20], some pertain to the very nature and composition of artificial intelligence itself, and these are addressed first. The remaining issues concern AI’s implementation and use, where the design of the AI often plays a significant role in causing or enabling these challenges. These issues are not confined to a single domain; they can manifest across various sectors and fields of application. Some of these concerns, such as privacy and data protection, are shared with other technological advancements, while others, like transparency, fairness, and accountability, are deeply intertwined and cannot be viewed in isolation. Nevertheless, AI’s capacity to amplify or facilitate the adverse consequences associated with these issues should never be underestimated. The ten issues are set out in Figure 5.

Figure 5, Source: Rowena Rodrigues, Legal and human rights issues of AI: Gaps, challenges and vulnerabilities. Journal of Responsible Technology 4. (2020). 100005. 10.1016/j.jrt.2020.100005.

Ensuring the accuracy and completeness of the data inputted into AI systems is paramount, with the goal of aligning AI-based outcomes as closely as possible with existing legal practices. Various experts in the field recommend the implementation of additional safeguards during the utilization phase of AI, including certification mechanisms and auditing. However, it is important to note that any official expansion of AI usage in the realm of justice should follow the establishment of comprehensive legal regulations governing AI and the allocation of responsibilities among various stakeholders involved in the decision-making process that incorporates AI.

iii) Artificial intelligence laws:

The European Union’s AI legislation represents a comprehensive framework meticulously crafted to govern the entire spectrum of AI systems, from their development to deployment and impact. This multifaceted regulation distinguishes AI systems by varying levels of risk (risk-based assessment approach), classifying them into distinct categories to ascertain the corresponding regulatory obligations. Furthermore, the legislation places a strong emphasis on transparency, mandating that AI systems provide clear and comprehensive information to users concerning their capabilities, limitations, and any potential biases they might exhibit. In essence, the EU AI Act underscores the European Union’s unwavering commitment to overseeing AI technologies in a manner that prioritizes safety, transparency, and the preservation of fundamental rights.[21]

The EU AI Act does not explicitly state a requirement of AI explainability. The term is, however, used once, in Recital 38 (‘Classification of High-Risk Systems for Law Enforcement Authorities’), as quoted below:

‘…Furthermore, the exercise of important procedural fundamental rights, such as the right to an effective remedy and to a fair trial as well as the right of defence and the presumption of innocence, could be hampered, in particular, where such AI systems are not sufficiently transparent, explainable and documented….’

The concept of ‘transparency’ finds frequent mention within the AI Act. It appears a total of fifteen times throughout the document, with nine occurrences in the recitals, seven of which are within the context we are currently discussing. Within the provisions themselves, the term ‘transparency’ is utilized six times. If we exclude the title of Article 13 of the AI Act, which is ‘Transparency and Provision of Information to Users’, the term appears five more times[22].

Transparency is not the same as explainability. Quoting some of the relevant articles:

Article 14(1) of the EU AI Act provides that,

‘High-risk AI systems shall be designed and developed in such a way, including with appropriate human-machine interface tools, that they can be effectively overseen by natural persons during the period in which the AI system is in use’

Article 14(2) of the EU AI Act provides that,

‘Human oversight shall aim at preventing or minimising the risks to health, safety or fundamental rights that may emerge when a high-risk AI system is used in accordance with its intended purpose or under conditions of reasonably foreseeable misuse, in particular when such risks persist notwithstanding the application of other requirements set out in this Chapter’

From a synthesis of these provisions, we can derive two conclusions. Firstly, for an AI user to use the system effectively, an understanding of the AI’s inner workings or, more precisely, of the outcomes it produces, is imperative. Secondly, the European Union legislator appears content with the empirical explanation mentioned earlier. These deductions are supported by the functional description of human oversight measures in Article 14(4) of the EU AI Act[23].

Furthermore, most of the prerequisites for AI transparency stem from the reference in Article 13(1) of the EU AI Act (AIA) to the provisions of Chapter 3 of Title III of the AIA, specifically Articles 16 through 29. Articles 16 through 28 of the AIA set out the requirements that AI providers must meet. From the standpoint of AI transparency in its broadest sense, the relevant requirements include the obligations to generate comprehensive technical documentation, maintain an automated log of events, implement a risk management system, establish a quality management system, subject AI systems to a conformity assessment procedure, address non-conformities promptly, and notify governmental authorities of instances of non-conformity. While these requirements do not explicitly guarantee transparency or make AI’s performance comprehensible, they should nonetheless facilitate empirical verification of the correctness of AI’s operations and the identification of the causes of AI’s malfunctions. Hence, they may, to a certain extent, contribute to achieving a certain level of transparency[24].

Various nations have implemented their distinct ‘explainability’ mandates. Take, for instance, France, which, under its Digital Republic Act, grants individuals the entitlement to receive explanations for algorithmic decisions made by administrative authorities concerning them.

iv) Intellectual property laws:

XAI is also subject to various intellectual property laws. As XAI technology evolves, it is important to ensure that the intellectual property related to the technology is properly protected. Additionally, the legal implications of XAI’s use of advanced algorithms and machine learning must be considered so that organizations do not infringe any intellectual property rights. XAI systems may include open-source components as well as proprietary elements. Companies should consider the various licensing options available, as well as the potential risks associated with certain licenses. Beyond licensing, significant challenges remain before XAI can be used widely. These include creating effective methods for interpreting AI-generated results, ensuring that AI is used ethically and responsibly, and developing protocols and standards to ensure accuracy.

v) Ethical concerns:

The ethical implications of AI in dispute resolution should not be underestimated. Ensuring that AI systems are designed and programmed to adhere to ethical guidelines and legal standards is an ongoing challenge. Striking the right balance between automation and human oversight is essential to avoid ethical dilemmas.

The High-Level Expert Group on Artificial Intelligence set up by the European Commission issued a report titled ‘Ethics Guidelines for Trustworthy AI’.

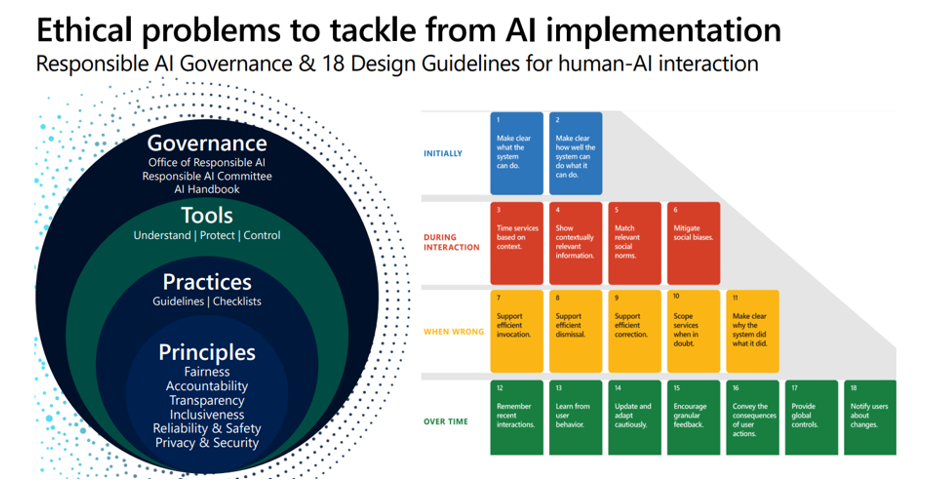

Figure 6: https://op.europa.eu/en/publication-detail/-/publication/d3988569-0434-11ea-8c1f-01aa75ed71a1#

Per the report, ‘Trustworthy AI has three components, which should be met throughout the system’s entire life cycle: (1) it should be lawful, complying with all applicable laws and regulations (2) it should be ethical, ensuring adherence to ethical principles and values and (3) it should be robust, both from a technical and social perspective since, even with good intentions, AI systems can cause unintentional harm’.

If we go by this, AI models should be explainable from the very beginning, and post-hoc explanation methods of XAI may not fall within the category of Trustworthy AI.

Figure 7: Ethical problems to tackle from AI implementation, Source: Valentina Ion, Responsible AI – Co-innovation with Government e-Invoicing Anomaly Detection : learnings (slides); available at https://actl.uva.nl/content/events/2023/03/towards-explainable-artificial-intelligence-xai-in-taxation-cpt-conference.html?cb

Devotion to legality, resilience, and ethical integrity takes center stage. The European Commission has taken upon itself the responsibility of elucidating the guiding interests and objectives that underpin the design of algorithms.[25]

It remains to be seen how this will evolve in the coming times and whether there will be any scope for global harmonization in this direction.

[1] John McCarthy, What is Artificial Intelligence? (2004)

[2] Margaret Rouse, Explainable AI (XAI), Techopedia (2022); available at https://www.techopedia.com/definition/33240/explainable-artificial-intelligence-xai

[3] Maciej Gawroński, Explainability of AI in Operation – Legal aspects; available at https://www.linkedin.com/pulse/explainability-ai-operation-legal-aspects-maciej-gawro%C5%84ski/

[4] Sungsoo Ray Hong et al., Human Factors in Model Interpretability: Industry Practices, Challenges, and Needs; available at https://arxiv.org/abs/2004.11440

[5] Miklós Virág et al., Is there a trade-off between the predictive power and the interpretability of bankruptcy models? The case of the first Hungarian bankruptcy prediction model; available at https://econpapers.repec.org/article/akaaoecon/v_3a64_3ay_3a2014_3ai_3a4_3ap_3a419-440.htm

[6] Marco Ribeiro et al., ‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier (2016)

[7] Chanyuan (Abigail) Zhang et al., Explainable Artificial Intelligence (XAI) in Auditing (Aug 1, 2022), International Journal of Accounting Information Systems; available at SSRN: https://ssrn.com/abstract=3981918 or http://dx.doi.org/10.2139/ssrn.3981918

[8] Zachary C. Lipton, The Mythos of Model Interpretability; available at https://arxiv.org/abs/1606.03490

[9] Marco Ribeiro et al., ‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier (2016)

[10] Zachary C. Lipton, The Mythos of Model Interpretability; available at https://arxiv.org/abs/1606.03490

[11] Sandra Wachter et al., Why a Right to Explanation of Automated Decision-Making Does Not Exist in the General Data Protection Regulation, 7 Int’l Data Privacy L. 76, 77, 79–90 (2017)

[12] Maciej Gawroński, Explainability of AI in Operation – Legal aspects; available at https://www.linkedin.com/pulse/explainability-ai-operation-legal-aspects-maciej-gawro%C5%84ski/

[13] Satyapriya Krishna et al., Towards Bridging the Gaps between the Right to Explanation and the Right to be Forgotten; available at https://arxiv.org/abs/2302.04288

[14] S Gardner, AI poses big privacy and data protection challenges; available at https://www.bna.com/artificial-intelligence-poses-n57982079158/

[15] M Brundage et al., The malicious use of artificial intelligence: forecasting, prevention, and mitigation (2018); available at https://arxiv.org/pdf/1802.07228.pdf

[16] S Wachter et al., A right to reasonable inferences: Re-thinking data protection law in the age of Big Data and AI, Columbia Business Law Review (2019); available at https://ora.ox.ac.uk/objects/uuid:d53f7b6a-981c-4f87-91bc-743067d10167/download_file?file_format=pdf&safe_filename=Wachter%2Band%2BMittelstadt%2B2018%2B-%2BA%2Bright%2Bto%2Breasonable%2Binferences%2B-%2BVersion%2B6%2Bssrn%2Bversion.pdf&type_of_work=Journal+article

[17] Rowena Rodrigues, Legal and human rights issues of AI: Gaps, challenges and vulnerabilities. Journal of Responsible Technology 4. (2020). 100005. 10.1016/j.jrt.2020.100005.

[18] Article 1, Protocol 1: Protection of property; available at https://www.equalityhumanrights.com/en/human-rights-act/article-1-first-protocol-protection-property

[19] A. D. (Dory) Reiling, ‘Courts and Artificial Intelligence’ (2020) 11(2) International Journal for Court Administration 8. DOI: https://doi.org/10.36745/ijca.343

[20] Rowena Rodrigues, Legal and human rights issues of AI: Gaps, challenges and vulnerabilities. Journal of Responsible Technology 4. (2020). 100005. 10.1016/j.jrt.2020.100005.

[21] Nupur Jalan, Evolving Artificial Intelligence Laws Around The World (Part 1); available at https://nupurjalan.com/evolving-artificial-intelligence-laws-around-the-world-part-1/

[22] Maciej Gawroński, Explainability of AI in Operation – Legal aspects; available at https://www.linkedin.com/pulse/explainability-ai-operation-legal-aspects-maciej-gawro%C5%84ski/

[23] Ibid

[24] Ibid

[25] A. D. (Dory) Reiling, ‘Courts and Artificial Intelligence’ (2020) 11(2) International Journal for Court Administration 8. DOI: https://doi.org/10.36745/ijca.343

The views in all sections are personal views of the author.