Artificial intelligence (AI) has become an integral part of our lives, revolutionizing industries and transforming the way we work and interact. However, the rapid advancement of AI technologies has highlighted the need for legal and regulatory frameworks to govern their development and deployment. Without adequate regulation, AI systems may pose risks such as discriminatory decision-making, privacy infringements, and threats to safety.

Several jurisdictions are taking measures of one kind or another to regulate AI, and some of them will be discussed in this series of blog posts. This part covers the EU Artificial Intelligence Act (the "EU AI Act").

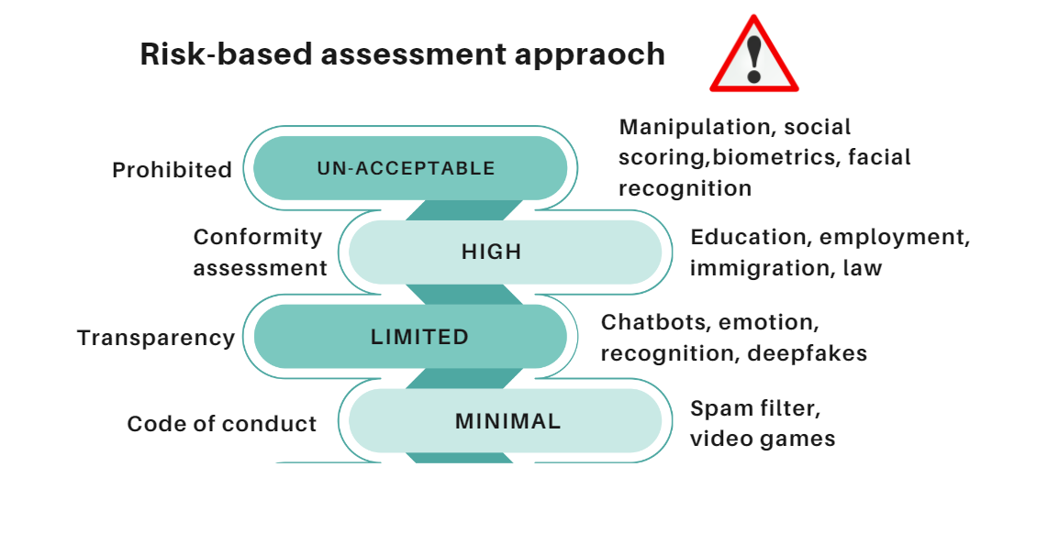

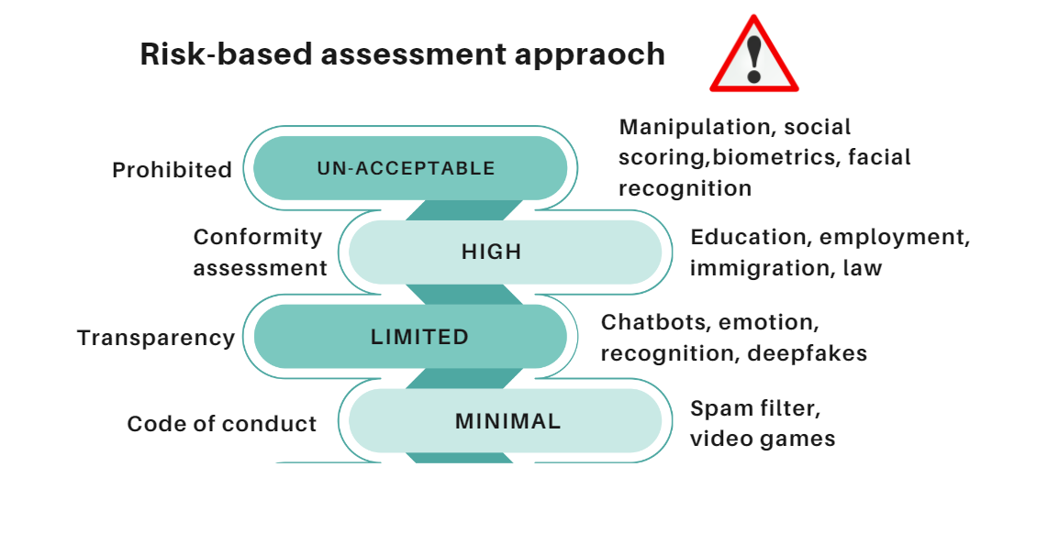

The EU AI Act provides a comprehensive framework for governing the development, deployment, and impact of AI systems. It classifies AI systems according to their level of risk, and the category a system falls into determines the extent of the regulatory requirements that apply to it. The Act also emphasizes transparency, requiring that AI systems give users clear information about their capabilities, limitations, and any potential biases. This risk-based approach to regulating AI is illustrated in the diagram above[1].

A brief discussion of the different risk categories:

1. Unacceptable risk

Unacceptable-risk AI systems are those that pose a clear threat to the safety, livelihoods, and fundamental rights of people. AI systems that manipulate individuals' behavior are also considered unacceptable, and their use is prohibited. Article 5[2] sets out the ‘Prohibited Artificial Intelligence Practices’; relevant extracts are quoted below for reference:

“1. The following artificial intelligence practices shall be prohibited:

(a) the placing on the market, putting into service or use of an AI system that deploys subliminal techniques beyond a person’s consciousness in order to materially distort a person’s behaviour in a manner that causes or is likely to cause that person or another person physical or psychological harm;

(b) the placing on the market, putting into service or use of an AI system that exploits any of the vulnerabilities of a specific group of persons due to their age, physical or mental disability, in order to materially distort the behaviour of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm;

(c) the placing on the market, putting into service or use of AI systems by public authorities or on their behalf for the evaluation or classification of the trustworthiness of natural persons over a certain period of time based on their social behaviour or known or predicted personal or personality characteristics, with the social score leading to either or both of the following:

(i) detrimental or unfavourable treatment of certain natural persons or whole groups thereof in social contexts which are unrelated to the contexts in which the data was originally generated or collected;

(ii) detrimental or unfavourable treatment of certain natural persons or whole groups thereof that is unjustified or disproportionate to their social behaviour or its gravity;

(d) the use of ‘real-time’ remote biometric identification systems in publicly accessible spaces for the purpose of law enforcement, unless and in as far as such use is strictly necessary for one of the following objectives:

(i) the targeted search for specific potential victims of crime, including missing children;

(ii) the prevention of a specific, substantial and imminent threat to the life or physical safety of natural persons or of a terrorist attack;

(iii) the detection, localisation, identification or prosecution of a perpetrator or suspect of a criminal offence referred to in Article 2(2) of Council Framework Decision 2002/584/JHA and punishable in the Member State concerned by a custodial sentence or a detention order for a maximum period of at least three years, as determined by the law of that Member State….”

2. High risk

High-risk AI systems include those used in critical infrastructure that could put people's life or health at risk, systems used in migration, asylum and border control management, and systems that may interfere with people's fundamental rights.

To ensure compliance with the EU AI Act, the regulation introduces a conformity assessment process for high-risk AI systems. This process involves evaluating the system against the requirements set out in the law, which can include testing and inspection and, for certain systems, assessment and certification by an independent third-party (notified) body. A successful conformity assessment serves as evidence that the AI system meets the necessary standards of safety, transparency, and accountability.

Article 6[3] lays down the classification rules for high-risk AI systems. Relevant extracts are quoted below:

“1. Irrespective of whether an AI system is placed on the market or put into service independently from the products referred to in points (a) and (b), that AI system shall be considered high-risk where both of the following conditions are fulfilled:

(a) the AI system is intended to be used as a safety component of a product, or is itself a product, covered by the Union harmonisation legislation listed in Annex II;

(b) the product whose safety component is the AI system, or the AI system itself as a product, is required to undergo a third-party conformity assessment with a view to the placing on the market or putting into service of that product pursuant to the Union harmonisation legislation listed in Annex II.

2. In addition to the high-risk AI systems referred to in paragraph 1, AI systems referred to in Annex III shall also be considered high-risk.”

Such AI systems are subject to strict obligations before they can be placed on the market. These include adequate risk assessment and mitigation systems; detailed documentation providing information on the system and its purpose so that authorities can assess its compliance; clear and adequate information for users; appropriate human oversight measures to minimize risk; and a high level of security and accuracy[4].

3. Limited risk

Limited-risk AI systems are subject to specific transparency obligations: users must be made aware that they are interacting with an AI system, such as a chatbot. Article 52[5] covers transparency obligations for certain AI systems; relevant extracts are quoted below:

“1. Providers shall ensure that AI systems intended to interact with natural persons are designed and developed in such a way that natural persons are informed that they are interacting with an AI system, unless this is obvious from the circumstances and the context of use. This obligation shall not apply to AI systems authorised by law to detect, prevent, investigate and prosecute criminal offences, unless those systems are available for the public to report a criminal offence.…”

4. Minimal risk

The final category covers AI systems whose risk is minimal, such as AI-enabled video games or spam filters. These applications can be used freely, although Article 69[6] encourages the adoption of voluntary codes of conduct for them. Relevant extracts are quoted below:

“1. The Commission and the Member States shall encourage and facilitate the drawing up of codes of conduct intended to foster the voluntary application to AI systems other than high-risk AI systems of the requirements set out in Title III, Chapter 2 on the basis of technical specifications and solutions that are appropriate means of ensuring compliance with such requirements in light of the intended purpose of the systems.

2. The Commission and the Board shall encourage and facilitate the drawing up of codes of conduct intended to foster the voluntary application to AI systems of requirements related for example to environmental sustainability, accessibility for persons with a disability, stakeholders participation in the design and development of the AI systems and diversity of development teams on the basis of clear objectives and key performance indicators to measure the achievement of those objectives….”

While the EU AI Act brings numerous benefits, it also poses certain challenges and concerns:

To comply with the EU AI Act, businesses must adhere to specific requirements and guidelines.

The EU AI Act brings several benefits:

The EU AI Act reflects the EU's commitment to regulating AI technologies in a manner that prioritizes safety, transparency, and fundamental rights. It has significant implications for the various stakeholders involved in developing and using AI systems, and it establishes a level playing field by providing a harmonized regulatory framework across the EU. By carefully complying with the Act's requirements, organizations can navigate the regulatory landscape and contribute to the responsible development and use of AI.

[1] https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A52021PC0206

[4] https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

The views in all sections are personal views of the author.